MPI_XSTAR, a parallel-manager program, has been written in C++ using the high-performance computing industry standard MPI library (e.g., Gropp et al., 1999). The MPI_XSTAR source code is freely available under the GPLv3 license on GitHub. MPI_XSTAR is designed to be used on a cluster or a multi-core machine composed of multiple CPUs (central processing units), enabling an easy generation of multiplicative tabulated models for spectral modeling tools. The code was initially developed and tested on the ODYSSEY cluster at Harvard University. Like XSTAR2XSPEC and PVM_XSTAR, MPI_XSTAR utilizes XSTINITABLE and XSTAR2TABLE. It uses the MPI library to run the XSTAR calling commands on a number of CPUs (e.g., specified by the np parameter value of mpirun).

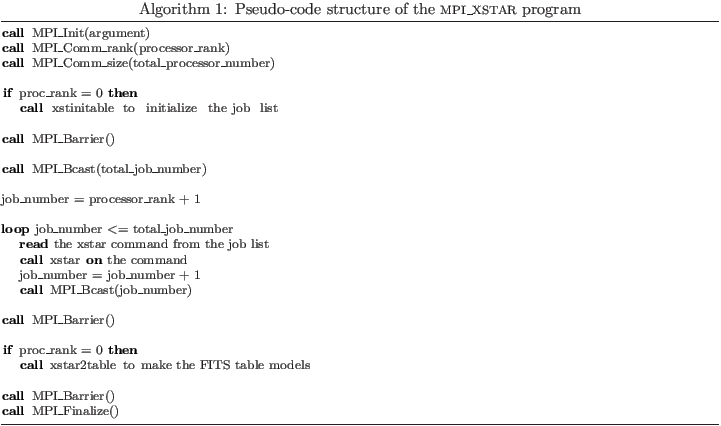

The implementation of an MPI-based parallelization of XSTAR, MPI_XSTAR, is outlined as pseudo-code in Algorithm 1. The program begins by generating the XSTAR calling commands for the variation of the input parameters, together with an initial FITS file, using XSTINITABLE in the master processor (rank zero). It passes the command-line arguments [obtained through an MPI_Init() call] to XSTINITABLE to create the XSTAR calling commands file (xstinitable.lis) and the initial FITS file (xstinitable.fits). It makes three copies of the initial FITS file, which will be used by XSTAR2TABLE at the end to create three table files (xout_mtable.fits, xout_ain.fits, and xout_aout.fits). While the master processor performs the initialization for a parallel setup of XSTAR, the other processors are blocked [by an MPI_Barrier() call] and wait. After the master processor completes its initialization, it broadcasts the number of XSTAR calling commands to the other processors [by an MPI_Bcast() call], which allows each processor to know which XSTAR calling command should be executed.
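The bookkeeping done by the master processor in this step can be illustrated with a minimal sketch. It is not taken from the MPI_XSTAR source: the function names `parse_commands` and `command_count` are hypothetical, the list is assumed to hold one calling command per line, and the MPI calls are only indicated in comments.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <sstream>
#include <string>
#include <vector>

// Split the text of a command list (assumed: one XSTAR calling command per
// line, as in xstinitable.lis) into commands, skipping blank lines.
std::vector<std::string> parse_commands(const std::string& list_text) {
    std::vector<std::string> commands;
    std::istringstream in(list_text);
    std::string line;
    while (std::getline(in, line)) {
        if (line.find_first_not_of(" \t\r") != std::string::npos) {
            commands.push_back(line);
        }
    }
    return commands;
}

// Number of commands as an int. This is the value that rank zero would share
// with the other ranks (e.g. as an MPI_INT through MPI_Bcast), so it has to
// fit in an int.
int command_count(const std::vector<std::string>& commands) {
    assert(commands.size() <=
           static_cast<std::size_t>(std::numeric_limits<int>::max()));
    return static_cast<int>(commands.size());
}
```

In an MPI program, only rank zero would call these functions, and the other ranks would receive the count after the barrier.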

After each processor is assigned an initial calling order based on its rank value [returned by an MPI_Comm_rank() call], it reads the corresponding XSTAR calling command from the initialization file (xstinitable.lis) and executes it by calling the XSTAR program. The calling order of the XSTAR command being executed by a processor is sent to the other processors [by an MPI_Bcast() call], so that they will not run it. Each processor is blocked until its XSTAR computation is done. After a processor completes its current XSTAR command, it reads the next calling command and executes a new XSTAR command. Processors that have finished their tasks and have no calling command left to execute are blocked [by an MPI_Barrier() call] and wait for those processors whose XSTAR commands are still running.
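The resulting distribution of work can be illustrated with a small serial simulation. This is a sketch of one reading of the scheme described above, not the MPI_XSTAR implementation: the function `assign_commands` and the run times passed to it are hypothetical, and the MPI_Bcast() exchange of calling orders is replaced by a shared loop counter.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// For each command (in list order), return the rank that ends up running it
// when every rank starts with the command matching its rank and, once it has
// finished, takes the next command that no rank has claimed yet.
// durations[i] is a hypothetical run time of command i.
std::vector<int> assign_commands(const std::vector<double>& durations,
                                 int num_ranks) {
    assert(num_ranks > 0);
    using Slot = std::pair<double, int>;  // (time the rank becomes free, rank)
    std::priority_queue<Slot, std::vector<Slot>, std::greater<Slot>> free_at;
    for (int rank = 0; rank < num_ranks; ++rank) {
        free_at.push({0.0, rank});
    }

    std::vector<int> owner(durations.size(), -1);
    for (std::size_t next = 0; next < durations.size(); ++next) {
        Slot slot = free_at.top();  // earliest-free rank; ties go to the lowest rank
        free_at.pop();
        owner[next] = slot.second;  // this rank claims the command
        free_at.push({slot.first + durations[next], slot.second});
    }
    return owner;
}
```

For example, with hypothetical run times {5, 1, 1, 1} on two ranks, rank 0 runs the first command while rank 1 runs the remaining three, which is why a long XSTAR run on one processor does not hold up the rest of the job list.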

After all XSTAR calling commands are executed, the master processor invokes XSTAR2TABLE upon the results of these XSTAR computations in order to generate multiplicative tabulated models, while the other processors are blocked [by an MPI_Barrier() call]. The FTOOLS program XSTAR2TABLE uses the XSTAR outputs in each folder, and adds them to the table files (xout_mtable.fits, xout_ain.fits, and xout_aout.fits) created from the initial FITS file in the initialization step. For a job list of XSTAR calling commands, XSTAR2TABLE must be run upon the results in each folder to generate the multiplicative tabulated models. As this procedure is very quick, it is done by the master processor in serial mode (on a single CPU). After the tabulated model FITS files are produced by the master processor, all processors are unblocked and terminated [by an MPI_Finalize() call].
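The master-only serial step can be sketched as follows. The function `build_tables` and the callback `process_folder` are hypothetical names; the callback stands in for whatever XSTAR2TABLE work is needed for one result folder, and no assumption is made here about how many XSTAR2TABLE runs that involves.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Run the post-processing step for every result folder, but only on the
// master rank (rank zero). Other ranks do nothing here; in an MPI program
// they would wait at a barrier. Returns the number of folders processed by
// the calling rank.
int build_tables(int rank, const std::vector<std::string>& folders,
                 const std::function<void(const std::string&)>& process_folder) {
    assert(rank >= 0);
    if (rank != 0) {
        return 0;  // non-master ranks skip the serial step
    }
    int processed = 0;
    for (const std::string& folder : folders) {
        process_folder(folder);  // stand-in for the XSTAR2TABLE work on one folder
        ++processed;
    }
    return processed;
}
```

Keeping this step on a single rank avoids concurrent writes to the same table files, which is consistent with the serial mode described above.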

MPI_XSTAR outputs include the standard output log file (XSTAR2XSPEC.LOG; similar to the script XSTAR2XSPEC) and an error report file for certain failure conditions, such as the absence of the XSTAR calling commands file (xstinitable.lis) or the initial FITS file (xstinitable.fits).

Ashkbiz Danehkar